The future of your code is no-code.

Is your codebase being reduced every day?

TL;DR: We argue that, with LLMs, we need to shift our software development practices to accommodate pseudo-deterministic, ephemeral code. We dive deep into the challenges of vibe-coding and propose a potential model for software development that allows for agentic code generation on every run.

Since the introduction of high-level programming languages (HLPLs), software development has steadily evolved, introducing new frameworks, repositories, IDEs, and services meant to reduce the complexity of creating new software. The core precept guiding this evolution is twofold: an idealized belief that software lets you solve a problem once and for all, and a pragmatic realization that the world is always more complex than you could imagine, which keeps creating new challenges. Complexity compounds while software eats the world. The reigning belief for the past 50 years has been to reuse as much as possible, which leads to the specialization of software.

Particularly in the past two decades, we’ve seen SaaS and global repositories, like npmjs, become key. Nowadays we don’t implement our own feature-flag solutions, ORMs, or RBAC systems. As a result, multiple startups have been built with tens of engineers instead of thousands, and it doesn’t end there. Many businesses don’t have a tech team, yet they do have their own e-commerce and social media software strategies based on services like Shopify or Instagram for Business. The landscape keeps shifting, and adapting to it has been a hallmark of our profession.

Most experienced software engineers took these notions to heart, which led to two shifts in recent years:

Developing solutions from scratch became a sign of a lack of seniority.

There are things you simply don’t do unless they are your team’s sole objective, e.g., creating a UI component library or an ORM, or implementing a search algorithm. In fact, seniority came to be defined by refining systems and deciding which providers and libraries to use.

Knowing your business’s core technical advantage became a must for great tech leaders.

Long gone are the days when a technologist’s value was locked in knowing the intricate details of a codebase (see The Phoenix Project: nowadays this is detrimental). For the past decade, it has always been about identifying early where to focus tech efforts and delegating the rest. Great tech leaders place the right bets so that the software they produce becomes key leverage for the business, core to its value proposition.

Vibe-coding is the ultimate expression of these precepts, and that’s why you should embrace it everywhere. In particular, two things happen simultaneously:

1. You can afford to generate code faster than ever.
2. Your applications can mutate themselves based on user input.

Almost everyone is focusing on (1), very few are exploring (2), and most start-ups are focused on a third path (3): providing infrastructure to everyone else. That third path is the easy way forward, skipping the conundrum altogether.

We argue here that you should start leveraging, by buying and building, solutions that follow an approach closer to (2). We explain why (1) keeps adding tech debt to your codebase, one of the most insidious challenges in this new world, whereas (2) effectively provides a new HLPL to your customers: what the industry has been calling no-code/low-code solutions, now expressed in human language.

First: Vibe-coding is, unfortunately, still coding.

You could think it’s funny, sad, glorious, or a condemnation, but vibe-coding is still coding. In fact, <rant> it’s a bit worse than old-fashioned coding: you still need to debug and test (which I really procrastinated on), and you don’t get to write those cool double for-loops anymore</rant>.

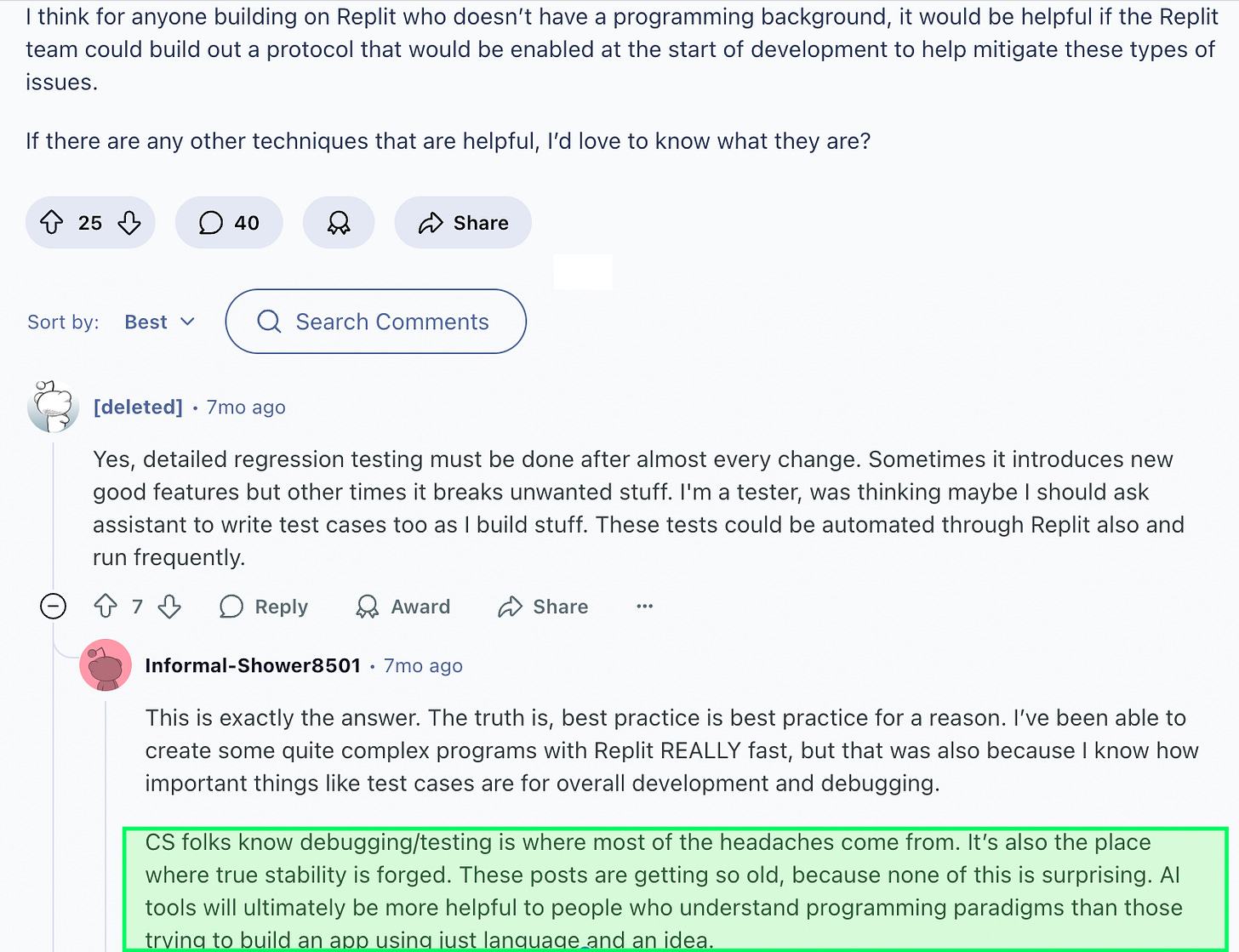

In fact, most vibe-coders are experiencing the same problems every software engineering team had before: lack of security, monitoring, and stable releases, “wasting” time in QA, etc. The reason is simple: they are generating code without knowing how to manage code.

The main issue for mature tech teams is a bit different, though: the generated code stays there, possibly forever. Unless you dedicate part of your team to pruning the codebase, something humans are historically bad at, the rate at which you introduce new, soon-forgotten features has skyrocketed. Yes, you can now afford to introduce a new set of features per customer, but it also means that in 2 years you’ll end up with a codebase equivalent to what a similar team previously produced in ~20 years.

This is a challenge not just because of the size and complexity of the code itself, but also because you have a fraction of the time to implement all the measures that previously came with decades of maturity. That will take a toll, and you’ll likely end up investing in:

- Agents that clean the codebase
- Agents that remove unused code paths
- Agents that remove unused features
- Monitoring, telemetry, etc.: the things you previously needed a team of 100 for

It would feel like working at a software company that is continuously deprecating its software while shrinking its team. Having seen this in the past, I know that the constant burden of spending 80% of the time on maintenance, instead of the classic 20%, leads to productivity issues.

So how do you start building systems that don’t need to rely on so much code?

Second: Prompts are still non-deterministic in nature.

“Code should self-improve with every run”

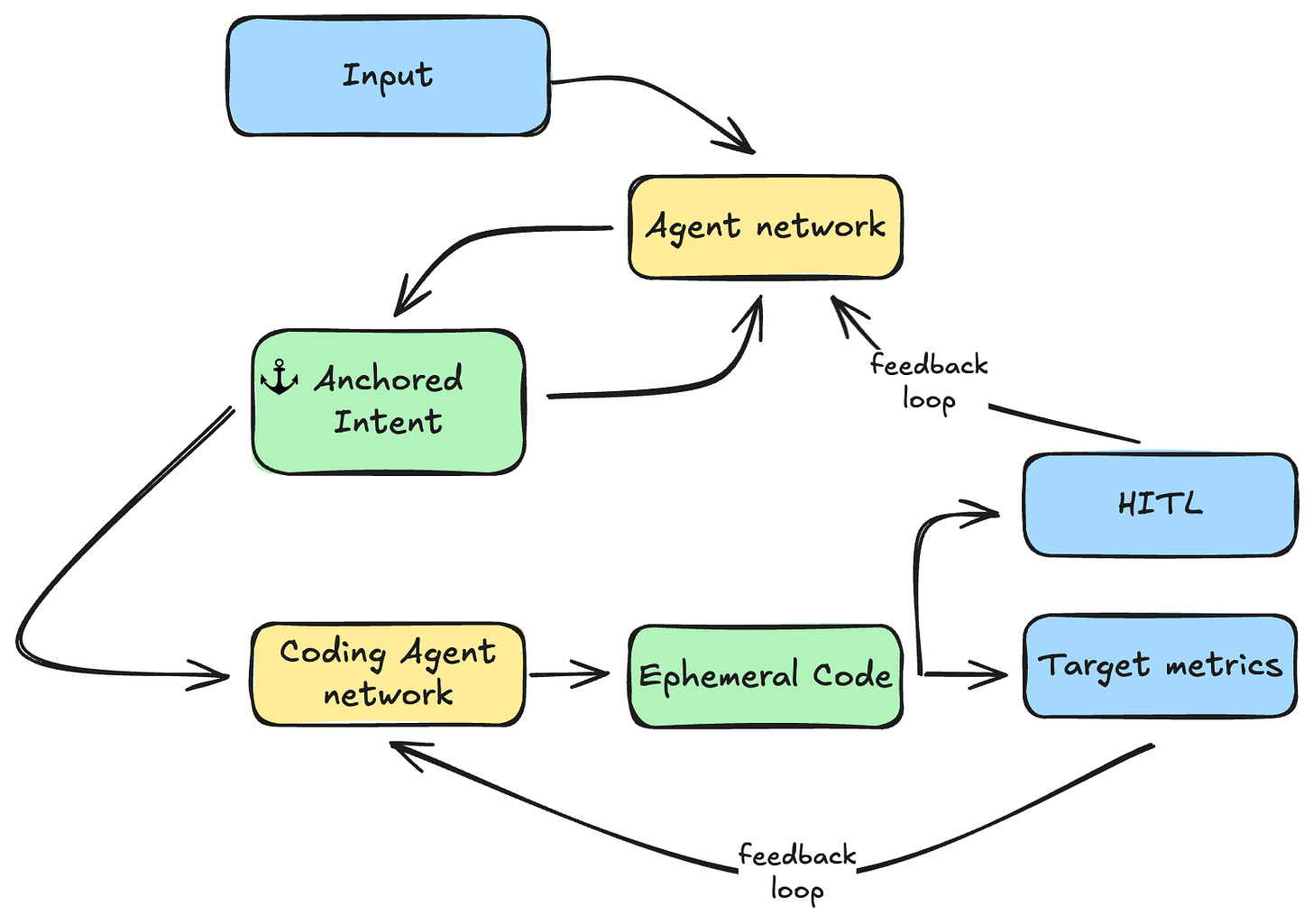

This has been the main tenet since day one at desplega.ai. The challenge was to adapt to, and rely on, the non-deterministic nature of prompts. Basically, you need to generate code that is stable enough for the core areas of your software to work as your users expect, and knowing what they expect is always key. Because users anchor their expectations to some version of the software (e.g., even if the functionality is equivalent, a human will be bothered if buttons change color every time), we decided to use the term ‘anchoring intents’ for what needs to be deterministic.

We concluded that, whichever strategy we followed, we needed to allow for (a) custom code and (b) a way to anchor certain properties or characteristics down to the detail. If you end up using custom code for (b), you run the risk of ending up with just code, which is exactly what we aim to avoid.
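One way to picture (b) without falling back to custom code is to keep anchors as plain data and check every regenerated version against them. The sketch below is an illustration under that assumption, not desplega.ai’s implementation: `AnchoredIntent` and `check_anchors` are hypothetical names, and how you extract the observed properties from a regenerated page (for example, from the rendered UI) is assumed and out of scope.

```python
from dataclasses import dataclass, field


@dataclass
class AnchoredIntent:
    """Hypothetical anchored intent: a goal in plain language, plus the exact
    properties that must not change from one regeneration to the next."""
    description: str
    anchors: dict[str, str] = field(default_factory=dict)


def check_anchors(intent: AnchoredIntent, observed: dict[str, str]) -> list[str]:
    """Compare properties observed in freshly generated output with the anchors.

    Anything not anchored is free to change; an empty result means the new
    version respects every anchored property.
    """
    violations = []
    for key, expected in intent.anchors.items():
        actual = observed.get(key)  # None if the property disappeared entirely
        if actual != expected:
            violations.append(f"{key}: expected {expected!r}, got {actual!r}")
    return violations


if __name__ == "__main__":
    checkout = AnchoredIntent(
        "Users recognize the checkout button",
        anchors={"checkout_button.color": "#0a7cff", "checkout_button.label": "Buy now"},
    )
    # Properties extracted from a regenerated page (extraction is assumed here).
    observed = {"checkout_button.color": "#ff0000", "checkout_button.label": "Buy now",
                "footer.layout": "two-column"}
    print(check_anchors(checkout, observed))
    # ["checkout_button.color: expected '#0a7cff', got '#ff0000'"]
```

The design choice is that only anchored keys are compared: the footer layout can change freely between runs, while the button users rely on cannot.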

To solve for this, we’ve been working with the following high-level flow:

A human in the loop to anchor behavior is an unavoidable requirement: whether it is a user action, a PM review, or a team meeting, the system needs a confirmation. With this strategy, your development efforts are now centered on agents, while the rest of the team (UX, Product, QA, etc.) keeps working from their day-to-day, customer-centric view. If you think about it, you have just made a major infrastructure update that will keep updating itself, and, with luck, reduced your codebase in the process.

Note

With enough expertise, you could adjust the anchored intents manually; however, these are usually bloated prompts with very detailed descriptions that keep the system deterministic where it matters.

Third: Expertise is still the key differentiating factor

Even in this high-level example, you can see that if your objective were to build an e-commerce platform, you would need to fine-tune at least:

A coding agent

You need a coding agent that knows your technology stack, business principles, design principles, and limitations in depth. It needs to know AWS/GCP, your ORM, your JS framework, payment rails, tracking system, etc.

An observability agent

To manage your monitoring, traceability, experiments, etc.: constant oversight of your infrastructure, monitoring production before an incident escalates. It needs to understand your system’s signals, which systems to interact with, the options at its disposal, and when to alert whom and how.

A set of agents to handle communications

Internally and externally, a wide range of agents supporting customers, employees, and prospects. This is your most revenue-sensitive set of agents, and maybe the most relevant one.

An agent to ensure your quality upon delivery

Whenever you put your system out there, it needs to work better each time. Whether through web UI E2E testing agents, API tests, evals, or all of the above, it ensures your application as a whole works as expected for all your customers, all the time. You could focus on the first three, but if your quality is poor, no one will use your product.

Each of these agents deserves special attention, and potentially a team working on it. This is why expertise is still needed in each area, and you should figure out the right way to get that expertise: in-house or not. You’ll always need someone on your team to review the configurations and manage these agents; however, you may not need someone on your team to build each agent’s infrastructure. Think of how you pay for Datadog, New Relic, or Grafana: each of the agent networks above would be an equivalent service.

In our e-commerce example, you may consider your products and network effects to be your key competitive advantage, bundled with your unique user experience. In that case, your team’s scope is controlling these agents, rather than creating their infrastructure, and ‘anchoring’ a particular voice and UI. They need to do it exceedingly well so that you don’t lose the race against the next e-commerce player.

Note

Learning from usage and regenerating the code accordingly means that the work revolves around a tight feedback loop that lets your agents regenerate the ephemeral code whenever an issue is discovered, in order to improve a target metric such as network performance or FPS. Setting up and managing that feedback loop will be the majority of the work, which is why you need to be aware of the exploding need for higher-quality solutions: the quality bar will keep rising.
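A single round of that feedback loop can be sketched as a selection step: a regenerated candidate replaces the current code only if it still respects the anchored intents and improves the target metric. This is a minimal sketch, assuming the candidates come from some regeneration agent and the metric from your telemetry; `feedback_loop`, `cost`, and `anchors_hold` are hypothetical names, and the numbers in the demo are made up for illustration.

```python
from collections.abc import Callable, Iterable


def feedback_loop(current: str,
                  candidates: Iterable[str],
                  cost: Callable[[str], float],
                  anchors_hold: Callable[[str], bool]) -> str:
    """Return the version to ship after one round of regeneration.

    A regenerated candidate replaces the current code only if it keeps every
    anchored intent and lowers the cost metric (e.g. load time in ms; for a
    higher-is-better metric such as FPS, pass its negation).
    """
    best, best_cost = current, cost(current)
    for candidate in candidates:
        if not anchors_hold(candidate):
            continue  # never trade an anchored intent for a better number
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best


if __name__ == "__main__":
    # Stand-ins for agent regenerations and telemetry; illustrative numbers only.
    load_ms = {"v1": 420.0, "v2": 310.0, "v3": 250.0}
    anchors_ok = {"v1": True, "v2": True, "v3": False}  # v3 changed an anchored property
    print(feedback_loop("v1", ["v2", "v3"], load_ms.__getitem__, anchors_ok.__getitem__))  # v2
```

Note that the fastest candidate, v3, is discarded because it broke an anchor; in this model, the anchors define what users can rely on, and the metric only ranks versions that honor them.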

The-one-thing: Code should be ephemeral

Remember the old days of C/C++, COBOL, Pascal, Assembly, and Fortran versus Java, Python, C#, RoR, JS, PHP, etc.? The latter’s VMs and interpreters may be slower, but the time savings and broader support more than justified the change.

Whatever solution you use or create, it should be one where the code is ephemeral and the intents are permanent. There’s no reason to see it differently this time: this is simply the next leap.

Leap first