Practical Ephemeral Code with Imploid.

Trying to imploid without imploding.

TL;DR: In my last post we discussed how to work at the next level; this is a practical setup using imploid & qa-use mcp. [repo]

Overview

After my disappointment with Cursor’s new plan mode, and a great chat with Sergio Espeja, I decided to give imploid a go; here are the lessons learned.

I’ve been using Cursor for well over a year now, and Claude Code for the past 3 months. Both tools have their good, bad and ugly, and learning the caveats of when each is most effective takes a significant amount of time. Furthermore, the setup is the killer: it takes several weeks of trial & error to understand exactly how to use each LLM most effectively, and those learnings are not cross-compatible. Let me give you three examples:

+ Jose Valim, creator of Elixir, discussed how this is a core part of the added value of his new project, tidewave.ai (great podcast!). Even though they work in a bring-your-own-apikey (BYOK) fashion for LLMs, their actual value is in how they use those LLM calls. He also makes the point that the most cumbersome challenge to tackle is testing, something he doesn’t have the time to address right now. It is clear to him that we are moving into the realm of conceptual challenges, more than ‘how to do it with code’.

+ Kieran Klaassen from Cora has a similar perspective on the skills needed to run these networks of agents when talking about Compounding Engineering. He puts it simply: whatever works for you. He then shows an amazing set of detailed agents that he has created specifically for his org.

+ Dex Horthy, founder of HumanLayer, goes further into how disruptive adopting key patterns could be for an individual. In fact, he points out how difficult it is to get beyond the 80% solution to the part that actually makes your product worth it. (watch it!)

If you are half as lazy as I am, you will understand by now why I got excited about Imploid. I love the end result and I would love to contribute, but I don’t know if I want to maintain it. The same way that I love my coffee, and I would never roast it myself. I have other things to attend to.

So, how did I do it?

First: Docker, Docker, Docker

If you think I would go the easy way, and simply install the npm package and wish for the best… you may not know me yet. After so many years as a developer I have learned that the easy way is usually the risky way, and I really didn’t want some LLM deciding it would be nice to have more space on my hard drive and proceeding to empty all my precious downloads. Thus, I followed the standard setup:

(a) Checked out the imploid repo into my repos folder.

(b) Created a new repo, docker-imploid.

(c) Asked claude-code very kindly to review the imploid repo and create a configuration for my Docker. It didn’t work… obvs.

A few lessons learned that should help you save time:

Imploid runs claude with ‘--dangerously-skip-permissions’, hence

Your docker needs to run with a non-sudo user by default.

Claude code only allows you to use this option if you are not sudo… thank you anthropic!

You need to override your claude binary so it includes an mcp config command. I simply created a wrapper for claude-code, see below.

```bash
#!/bin/bash
# ~/bin/claude
# Claude wrapper to always load MCP config

MCP_CONFIG="$HOME/bin/mcp_config.json"

# Check if this is an MCP management command
if [[ "$1" == "mcp" ]]; then
    # Pass through MCP management commands directly
    exec /usr/local/bin/claude "$@"
fi

# For all other commands, add --mcp-config if the config exists
if [[ -f "$MCP_CONFIG" ]]; then
    exec /usr/local/bin/claude --mcp-config "$MCP_CONFIG" "$@"
else
    exec /usr/local/bin/claude "$@"
fi
```

Git will use your SSH keys by default

It’s a bit misleading, but your gh token is only useful for gh, so it’s important to make sure your docker exposes your ~/.ssh directory and does the proper mappings. Personally, I have several ssh keys, so this was a nightmare.

Imploid expects a setup.sh file in your repo’s root

Simply rename your setup script accordingly. It’s good practice, and a naming convention, so do it!

Map ~/.imploid to your docker directory (that docker-imploid repo I mentioned above).

That way you can keep track of what’s going on in your agent’s activity. Personally, it made it easy for me to set all my .env variables and also run the system locally when needed.
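For the SSH lesson above, one possible way to tame several keys (not necessarily what I ended up with) is to pin a single key per git command through git's `GIT_SSH_COMMAND` variable. This is a minimal sketch: the function names and the key path in the usage comment are hypothetical, and it assumes the key path contains no single quotes.

```shell
#!/usr/bin/env bash
# Sketch: pin one SSH key per git command when several keys are mounted.
# ssh_command_for_key and git_with_key are hypothetical helper names.

# Build an ssh command line that offers only the given key.
# The path is wrapped in single quotes because git hands GIT_SSH_COMMAND
# to a shell; a path containing a single quote is not supported here.
ssh_command_for_key() {
  local key_path="$1"
  printf "ssh -i '%s' -o IdentitiesOnly=yes" "$key_path"
}

# Run any git command with that key, for example:
#   git_with_key "$HOME/.ssh/id_work" fetch origin
git_with_key() {
  local key_path="$1"
  shift
  GIT_SSH_COMMAND="$(ssh_command_for_key "$key_path")" git "$@"
}
```

`IdentitiesOnly=yes` keeps ssh from trying every other key it can find, which is usually what goes wrong when a whole ~/.ssh directory is mapped into the container.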

I know it reads easy, but it took Claude & myself an hour of furious debate to agree on the strategy we followed, and I was extremely happy to find out the decisions were the right ones once I started debugging.
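To shorten that kind of debugging, the lessons above can be turned into a small preflight check to run inside the container before starting the agent. This is a minimal sketch, not part of Imploid: the function names are hypothetical, and it assumes ~/.imploid is a directory and that the repo path is passed in explicitly.

```shell
#!/usr/bin/env bash
# Preflight sketch for the lessons above. All function names are hypothetical.

# Lesson: claude refuses --dangerously-skip-permissions for root,
# so fail early when the uid is 0. The uid defaults to the current user.
check_not_root() {
  local uid="${1:-$(id -u)}"
  [ "$uid" -ne 0 ]
}

# Check the paths the container is expected to see:
# ~/.ssh (git over SSH), ~/.imploid (agent state) and setup.sh in the repo root.
# Prints one line per problem and returns non-zero if any was found.
preflight() {
  local home_dir="$1" repo_dir="$2" uid="${3:-$(id -u)}" problems=0
  check_not_root "$uid" || { echo "running as root"; problems=$((problems + 1)); }
  [ -d "$home_dir/.ssh" ] || { echo "missing $home_dir/.ssh"; problems=$((problems + 1)); }
  [ -d "$home_dir/.imploid" ] || { echo "missing $home_dir/.imploid"; problems=$((problems + 1)); }
  [ -f "$repo_dir/setup.sh" ] || { echo "missing $repo_dir/setup.sh"; problems=$((problems + 1)); }
  [ "$problems" -eq 0 ]
}

# Example (the repo path is a placeholder):
#   preflight "$HOME" /path/to/your/repo && echo "ready"
```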

Finally, remember that once you have docker up and running, you need to do two things:

docker-compose exec <instance name> bash, and then:

npx imploid@latest --config

npx imploid@latest --foreground

Second: It works, It works!

I dipped my toes into the Imploid workflow with three tasks of varied complexity:

Easy - Change the default behavior of a filter.

This was my first change, and the PR was flawless; it felt like I could delegate those intern bugs without giving it a second thought. I simply asked for two things:

Make the filter disabled by default, and

Preserve the user selection in local storage.

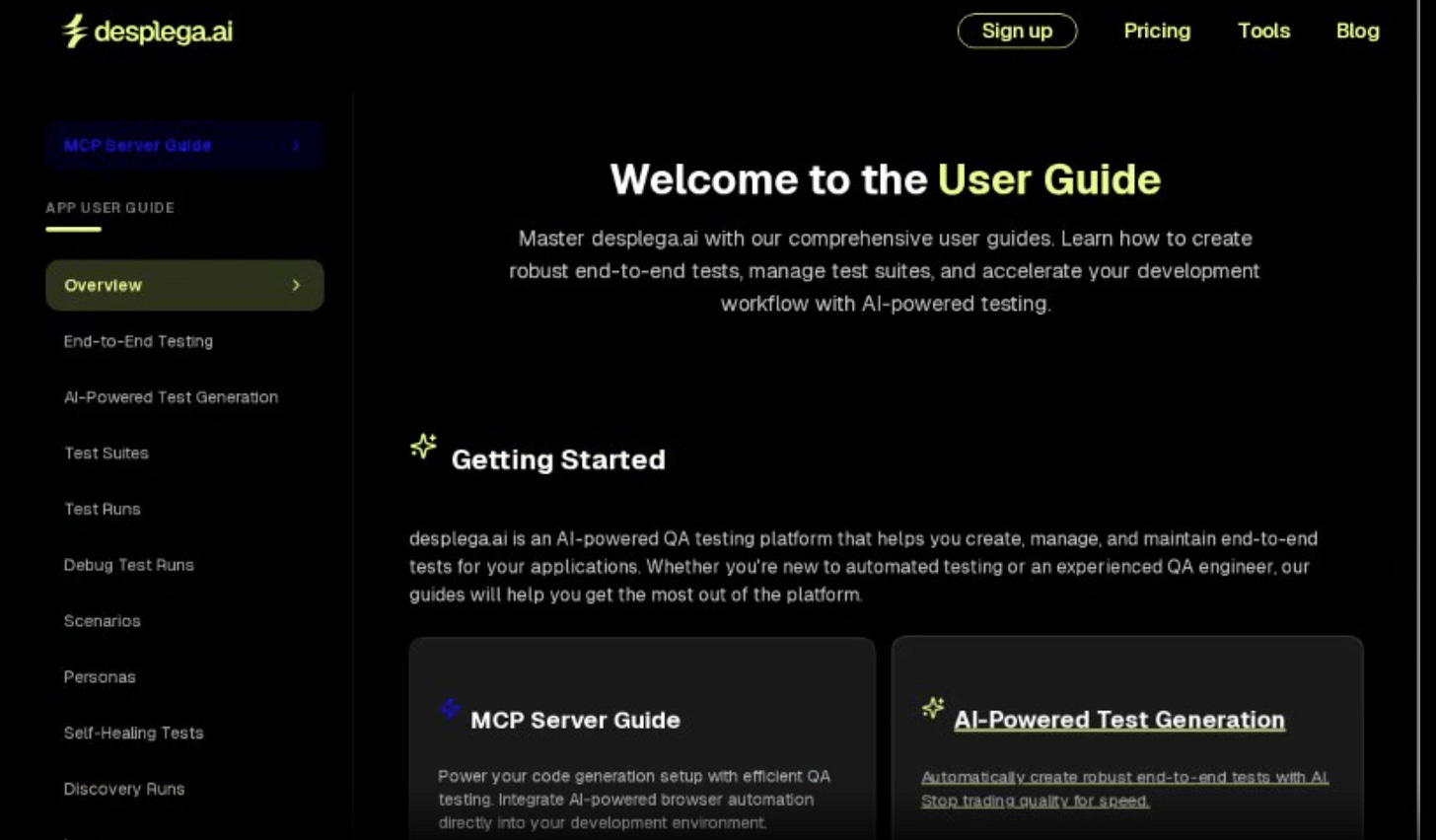

Medium - Add a new floating menu on the left side of our how-to’s

This is a non-trivial UX change that requires the agent to understand how our how-to section is structured, all the subpages, and how I personally want the floating menu to be displayed. It’s open-ended enough that chances were it would fail… it didn’t!

For this task, it was essential to get visual feedback without needing to actually run the branch locally. This is when having a way to do end-to-end tests remotely came in handy. More in the next section.

Hard - Translate my website to Spanish.

I took my landing page, and I simply asked it to translate it to Spanish in a new tree under /es/.

At this point I ran into my first hiccups. Claude didn’t feel comfortable translating text for me. On top of that, given the size of the task (translating 50+ pages), the agent decided the task was too big and suggested I partition it into chunks by creating multiple tasks at a higher level.

It felt like I was being asked to do something that was part of the planning, but once that was done, it proceeded without many issues. I ended up doing the following:

1) Task to do a setup for a ‘Spanish web site’

2…n-1) Multiple tasks translating different subdirectories of content.

n) A task to make sure the /es/ site linked only to /es/ pages.
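The split above can be sketched as a small script that prints one task title per content subdirectory, framed by the setup task and the final link check. This is only an illustration: `translation_tasks`, the wording of the titles, and the assumption that each top-level subdirectory is one chunk are all hypothetical, and creating the actual tasks is left to whatever tracker you use.

```shell
#!/usr/bin/env bash
# Sketch: print the list of translation tasks for a content directory.
# translation_tasks and the title wording are hypothetical.
translation_tasks() {
  local content_dir="$1" dir name
  echo "Set up the Spanish web site under /es/"
  for dir in "$content_dir"/*/; do
    [ -d "$dir" ] || continue        # no subdirectories: the glob stays literal
    name="$(basename "$dir")"
    [ "$name" = "es" ] && continue   # skip the translated tree itself
    echo "Translate /$name/ into /es/$name/"
  done
  echo "Make sure the /es/ site links only to /es/ pages"
}

# Example (the path is a placeholder):
#   translation_tasks ./content
```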

By the end, it was clear that the translation task was beyond claude’s scope. I opted for halting the project. If I continue in this direction, I’ll likely use a different LLM to translate the texts.

Third: How are you going to test this?

A nice little thing about Imploid is that it defines your unit tests by default. In fact, with a little fine-tuning, you can get fair unit-test coverage on the fly. However, if you have read some of my earlier posts, you know I’m not a fan of unit testing. Unit tests help you with syntactic correctness while creating a false sense of control over the actual business semantics that you want to validate.

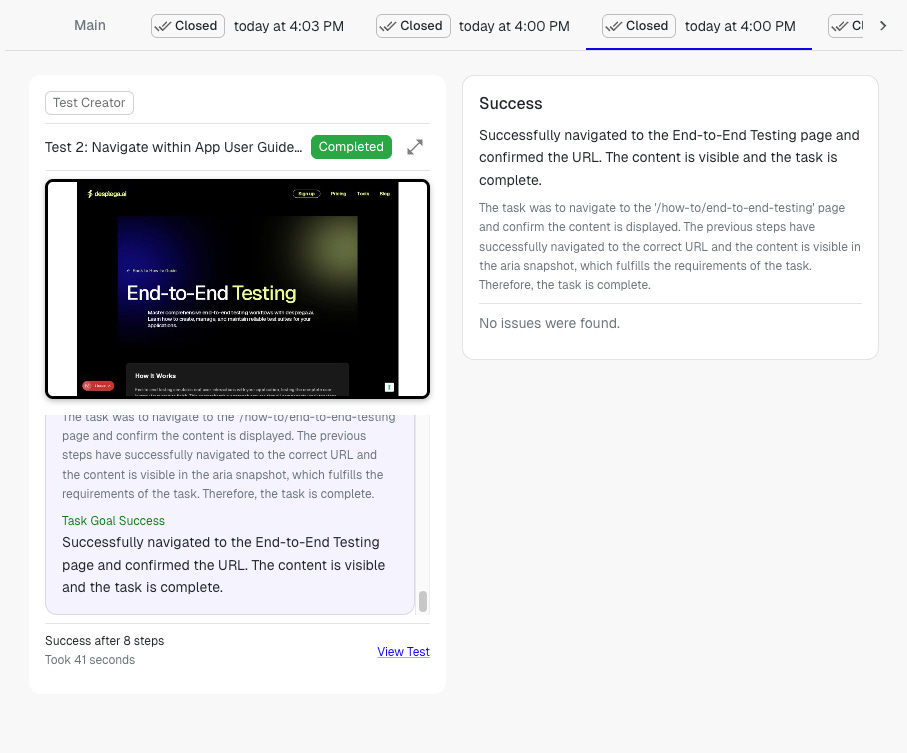

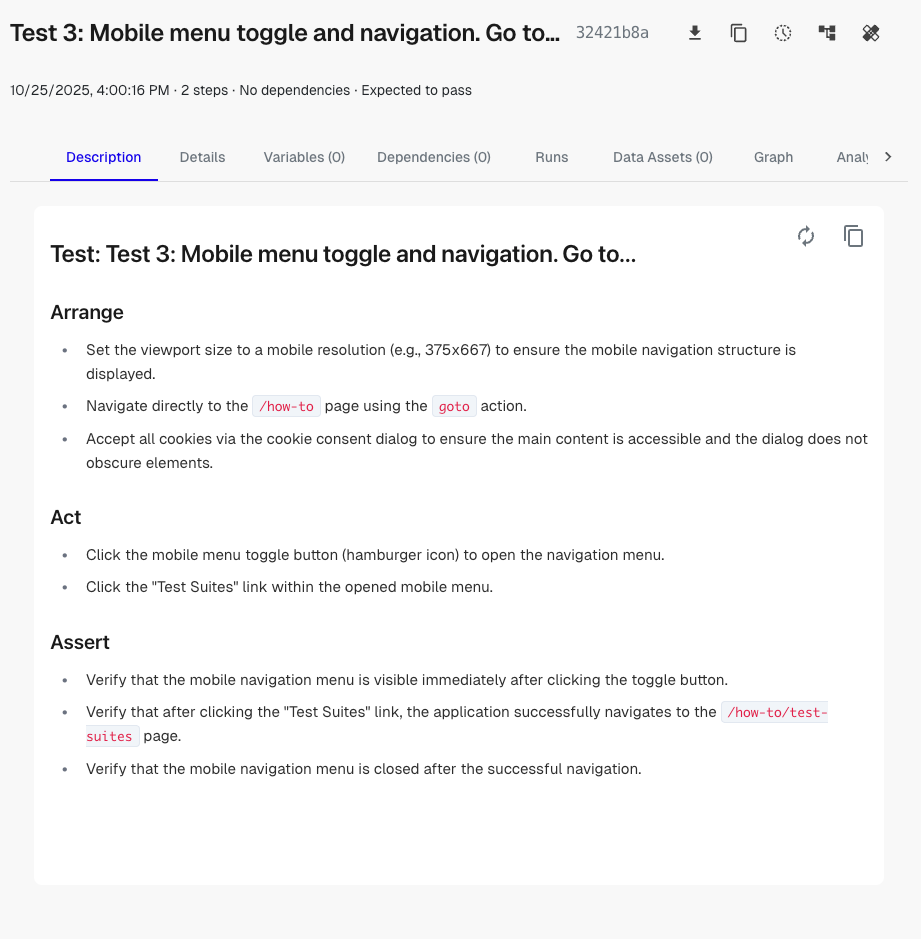

Hence, for the end-to-end experience, I used qa-use-mcp. This actually had 3 great advantages:

Real-time feedback

I got to see in real time what was happening in the desplega.ai web app: what the agent was trying to test, how it had decided to test the feature, and the issues it was finding or that were arising.

Fully remote

I didn’t need to run the build to verify the functionality.

Because I had the recordings, I could simply check what was done in the e2e tests and verify the UX was the one I wanted. That actually saved me valuable time, because even if the feature was ‘correct’, I could see the flow and decide I wanted to change parts of it.

An extra feedback loop

Running the tests also generated feedback for claude code. If you think about it, it was now interacting for the first time with a system that had a different objective, not finishing the task, but actually verifying it was a valid functionality for the context and user persona it had defined.

In my case, I allowed the system to decide what e2e tests to create, so it decided to do a mobile test also. By the end, I already had 3 end-to-end tests ready to be used in staging moving forward.

It wasn’t a perfect experience, but this little add-on let me break through the docker black box; otherwise I would have been reading logs and running the solution locally. It’s true it’s not a mandatory step, and in some cases it won’t be difficult for you to run in localhost; however, if you are running multiple versions, this truly gets you to the next level.

The-one-thing: Give it time

This week Cursor launched their planner, based on similar concepts, and you also have Conductor, HumanLayer, and Tidewave plus many more.

It’s up to you which one you’d choose, always keeping in mind that vendor lock-in and customization are going to be your biggest challenges moving on.

Remember the old days of C/C++, Cobol, Pascal, Assembly, and Fortran vs Java, Python, C#, RoR, JS, PHP, etc. The VMs and interpreters of the newer languages may be slower, but the savings in developer time justified the change immensely. There’s no reason to see it differently this time; this is simply the next leap.

Take the leap.